Building a Dynamic Jenkins 2.253 Build Environment on Kubernetes 1.17.16

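Preface

Dynamic builds are an important part of DevOps: they cut repetitive manual work and can noticeably improve a team's efficiency.

To get there we bring out two heavy hitters: Kubernetes and Jenkins.

Kubernetes is a container orchestration system. Think of it as a flexible scheduler that manages container resources on demand.

Jenkins is our old friend, the code mover, and it runs build jobs on the containers that Kubernetes provides.

This tutorial runs a Jenkins Master and multiple Jenkins Slaves on Kubernetes, creating the Slaves dynamically, to get automated dynamic builds.

I will walk through the following steps:

- Deploying the Jenkins Master on the cluster.
- Configuring Jenkins Slaves to scale dynamically.
- Running a simple build to test the setup.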

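Deploying the Jenkins Master

Steps

- Deploy the NFS server.
- Deploy the k8s-nfs client.
- Deploy the Jenkins Master node.

Start deploying

Deploying the NFS server is not covered here; it only needs to be reachable from inside the cluster.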
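Before continuing, it can help to confirm that the export is visible from a cluster node. The sketch below is one way to do that, assuming the showmount tool is installed, that the server answers mount queries (an NFSv4-only server may not), and that the directory itself is exported. The helper name nfs_export_visible is hypothetical; the address and path are the ones used later in 03-nfs-deployment.yaml.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if <path> appears in the export list of <server>.
nfs_export_visible() {
  server="$1"; path="$2"
  # The first line of `showmount -e` is a header, so start matching at line 2.
  showmount -e "$server" | awk -v p="$path" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}

# Usage (run on a node that can reach the NFS server):
#   nfs_export_visible 10.200.1.112 /data/k8s/kube-ops/jenkins && echo "export visible"
```

If the check fails, the provisioner Pod deployed in the next step will most likely hang while mounting its volume, so it is cheaper to find out here.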

Deploy the k8s-nfs client

First prepare the RBAC and authentication objects in a file named 01-nfs-rbac.yaml. All manifests in this tutorial use the kube-ops namespace, so create it first if it does not exist (kubectl create namespace kube-ops).

    # Create a ServiceAccount named nfs-kube-ops in the kube-ops namespace.
    # The NFS client runs under this account.
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-kube-ops
      namespace: kube-ops
    ---
    # ClusterRole granting the permissions the NFS client needs, including
    # creating/deleting PersistentVolumes and updating PersistentVolumeClaims.
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-kube-ops-runner
    rules:
      - apiGroups: [""]
        resources: ["nodes"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
      - apiGroups: [""]
        resources: ["services"]
        verbs: ["get", "watch", "list"]
      - apiGroups: [""]
        resources: ["pods"]
        verbs: ["get", "list", "patch", "watch", "create", "delete"]
    ---
    # Bind the ServiceAccount to the ClusterRole.
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-kube-ops
    subjects:
      - kind: ServiceAccount
        name: nfs-kube-ops
        namespace: kube-ops
    roleRef:
      kind: ClusterRole
      name: nfs-kube-ops-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    # Role that allows modifying Endpoints, used for leader election.
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-kube-ops
      namespace: kube-ops
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    # Bind the Role to the ServiceAccount.
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-kube-ops
      namespace: kube-ops
    subjects:
      - kind: ServiceAccount
        name: nfs-kube-ops
        namespace: kube-ops
    roleRef:
      kind: Role
      name: leader-locking-nfs-kube-ops
      apiGroup: rbac.authorization.k8s.io

Define the StorageClass object in a file named 02-nfs-class.yaml.

    # The provisioner value nfs-jenkins-kube-ops must match the
    # PROVISIONER_NAME environment variable in the NFS Deployment.
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfs-jenkins-kube-ops
    provisioner: nfs-jenkins-kube-ops
    allowVolumeExpansion: true
    parameters:
      archiveOnDelete: "true"

Define an NFS dynamic volume provisioner (think of it as the NFS client) that provisions NFS PersistentVolumes on demand, in a file named 03-nfs-deployment.yaml.

Why is an NFS dynamic volume provisioner needed?

The provisioner has to be created and deployed because it implements the logic that creates and manages storage volumes.

In Kubernetes, a StorageClass is only a resource object that describes a storage configuration; it cannot create volumes by itself.

In short, the StorageClass declares the storage configuration and class, while the NFS provisioner performs the actual storage operations.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-jenkins-kube-ops
      labels:
        app: nfs-jenkins-kube-ops
      namespace: kube-ops
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-jenkins-kube-ops
      template:
        metadata:
          labels:
            app: nfs-jenkins-kube-ops
        spec:
          serviceAccountName: nfs-kube-ops
          containers:
            - name: nfs-jenkins-kube-ops
              image: dyrnq/nfs-subdir-external-provisioner:v4.0.2
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                # Must match the provisioner field of the NFS StorageClass
                - name: PROVISIONER_NAME
                  value: nfs-jenkins-kube-ops
                # NFS server address
                - name: NFS_SERVER
                  value: 10.200.1.112
                # NFS directory path
                - name: NFS_PATH
                  value: /data/k8s/kube-ops/jenkins
          volumes:
            # Same server and path as above
            - name: nfs-client-root
              nfs:
                server: 10.200.1.112
                path: /data/k8s/kube-ops/jenkins
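The StorageClass and the provisioner find each other purely by name, so a typo in either one leaves PVCs stuck in Pending. The following is a minimal sketch of a sanity check, assuming kubectl is already configured for the cluster. The helper name check_provisioner_match is hypothetical; the resource names are the ones from the manifests above.

```shell
#!/bin/sh
# Hypothetical helper: compare the StorageClass provisioner field with the
# PROVISIONER_NAME env var of the provisioner Deployment.
check_provisioner_match() {
  sc_name="$1"; ns="$2"; deploy="$3"
  sc_prov=$(kubectl get storageclass "$sc_name" -o jsonpath='{.provisioner}')
  env_prov=$(kubectl -n "$ns" get deployment "$deploy" \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="PROVISIONER_NAME")].value}')
  if [ -n "$sc_prov" ] && [ "$sc_prov" = "$env_prov" ]; then
    echo "match: $sc_prov"
  else
    echo "mismatch: storageclass='$sc_prov' deployment='$env_prov'"
    return 1
  fi
}

# Usage (requires cluster access, after the manifests have been applied):
#   check_provisioner_match nfs-jenkins-kube-ops kube-ops nfs-jenkins-kube-ops
```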

Deploy the Jenkins Master node

Create a ServiceAccount bound to cluster-admin (restrict the permissions where appropriate) in a file named 04-jenkins-account.yaml.

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: jenkins-master
      name: jenkins-admin
      namespace: kube-ops
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: jenkins-admin
      labels:
        k8s-app: jenkins-master
    subjects:
      - kind: ServiceAccount
        name: jenkins-admin
        namespace: kube-ops
    roleRef:
      kind: ClusterRole
      name: cluster-admin
      apiGroup: rbac.authorization.k8s.io

Create the PVC for the Jenkins Master in a file named 05-jenkins-pvc.yaml.

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: jenkins-kube-ops
      namespace: kube-ops
      annotations:
        nfs.io/storage-path: "jenkins-kube-ops"
    spec:
      # nfs-jenkins-kube-ops is the NFS StorageClass defined above
      storageClassName: nfs-jenkins-kube-ops
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 500Gi

Build the Jenkins Master image to suit your environment. My Dockerfile is below, for reference only.

The image I build here is named 10.200.0.143:80/wenwo/devops/jenkins-master:v1.

    FROM jenkins/jenkins:2.253
    LABEL maintainer="runfa.li"

    # Switch to root for the installation steps
    USER root

    # Install Maven
    COPY maven.tar.gz .

    # Install Node.js
    COPY node_bin /usr/local/node_bin
    COPY node /usr/local/node

    RUN tar -xvf maven.tar.gz && \
        mkdir -p /data && \
        mv maven /data && \
        rm -f maven.tar.gz && \
        chmod 755 -R /data/maven/bin/ && \
        chmod 755 -R /usr/local/node_bin && \
        chmod 755 -R /usr/local/node/lib/node_modules/cnpm/bin && \
        chmod 755 -R /usr/local/node/lib/node_modules/n/bin && \
        chmod 755 -R /usr/local/node/lib/node_modules/npm/bin && \
        chmod 755 -R /usr/local/node/lib/node_modules/pm2/bin && \
        chmod 755 -R /usr/local/node/lib/node_modules/@vue/cli/bin && \
        chmod 755 -R /usr/local/node/lib/node_modules/@vue/cli-service/bin && \
        chmod 755 /usr/local/node/bin/node && \
        ln -sf /data/maven/bin/mvn /usr/bin/mvn && \
        ln -sf /usr/local/node/bin/node /usr/bin/node && \
        ln -sf /usr/local/node/lib/node_modules/cnpm/bin/cnpm /usr/bin/cnpm && \
        ln -sf /usr/local/node/lib/node_modules/n/bin/n /usr/bin/n && \
        ln -sf /usr/local/node/lib/node_modules/npm/bin/npm-cli.js /usr/bin/npm && \
        ln -sf /usr/local/node/lib/node_modules/npm/bin/npx-cli.js /usr/bin/npx && \
        ln -sf /usr/local/node/lib/node_modules/pm2/bin/pm2 /usr/bin/pm2 && \
        ln -sf /usr/local/node/lib/node_modules/pm2/bin/pm2-dev /usr/bin/pm2-dev && \
        ln -sf /usr/local/node/lib/node_modules/pm2/bin/pm2-docker /usr/bin/pm2-docker && \
        ln -sf /usr/local/node/lib/node_modules/pm2/bin/pm2-runtime /usr/bin/pm2-runtime && \
        ln -sf /usr/local/node/lib/node_modules/@vue/cli/bin/vue.js /usr/bin/vue && \
        ln -sf /usr/local/node/lib/node_modules/@vue/cli-service/bin/vue-cli-service.js /usr/bin/vue-cli-service && \
        mkdir -p /data/repo

Write the Jenkins Master Deployment manifest in a file named 06-jenkins-deployment.yaml.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: jenkins-master
      namespace: kube-ops
    spec:
      selector:
        matchLabels:
          app: jenkins-master
      template:
        metadata:
          labels:
            app: jenkins-master
        spec:
          terminationGracePeriodSeconds: 10
          serviceAccountName: jenkins-admin
          containers:
            - name: jenkins-master
              # The image I built myself
              image: 10.200.0.143:80/wenwo/devops/jenkins-master:v1
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 8080
                  name: web
                  protocol: TCP
                - containerPort: 50000
                  name: agent
                  protocol: TCP
              resources:
                limits:
                  memory: 16Gi
                requests:
                  memory: 1Gi
              livenessProbe:
                httpGet:
                  path: /login
                  port: 8080
                initialDelaySeconds: 60
                timeoutSeconds: 5
                failureThreshold: 12
              readinessProbe:
                httpGet:
                  path: /login
                  port: 8080
                initialDelaySeconds: 60
                timeoutSeconds: 5
                failureThreshold: 12
              volumeMounts:
                - name: jenkinshome
                  subPath: jenkins-master
                  mountPath: /var/jenkins_home
              env:
                # Memory limit expressed in MiB, used for -Xmx below
                - name: LIMITS_MEMORY
                  valueFrom:
                    resourceFieldRef:
                      resource: limits.memory
                      divisor: 1Mi
                - name: TZ
                  value: Hongkong
                - name: JAVA_OPTS
                  value: -Xmx$(LIMITS_MEMORY)m -XshowSettings:vm -Dhudson.slaves.NodeProvisioner.initialDelay=0 -Dhudson.slaves.NodeProvisioner.MARGIN=50 -Dhudson.slaves.NodeProvisioner.MARGIN0=0.85 -Duser.timezone=Asia/Shanghai
          securityContext:
            fsGroup: 1000
          # Mount the PVC created above
          volumes:
            - name: jenkinshome
              persistentVolumeClaim:
                claimName: jenkins-kube-ops
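In the Deployment above, LIMITS_MEMORY is the memory limit divided by 1Mi, so the 16Gi limit expands to -Xmx16384m. The heap may then grow to the full container limit, which leaves no headroom for metaspace, thread stacks, and other non-heap memory. The sketch below shows the arithmetic and how a smaller heap share would be computed. The 75% ratio is my assumption, not the author's setting, and heap_mb is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: heap size in MiB for a container limit given in GiB
# and a heap percentage (100 reproduces what the manifest does as written).
heap_mb() {
  limit_gi="$1"; percent="$2"
  limit_mi=$((limit_gi * 1024))   # what resourceFieldRef with divisor 1Mi yields
  echo $((limit_mi * percent / 100))
}

echo "-Xmx$(heap_mb 16 100)m"   # value produced by the manifest as written
echo "-Xmx$(heap_mb 16 75)m"    # a more conservative alternative
```

With a 16Gi limit the two lines print -Xmx16384m and -Xmx12288m. Whether the extra headroom matters depends on the plugin set and build load, so treat the ratio as a starting point.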

Write the Jenkins Master Service manifest in a file named 07-jenkins-services.yaml.

    apiVersion: v1
    kind: Service
    metadata:
      name: jenkins-master
      namespace: kube-ops
      labels:
        app: jenkins-master
    spec:
      selector:
        app: jenkins-master
      # This works like port mapping; the address is a company intranet IP.
      # With this setting, Jenkins can be opened from the intranet at http://10.200.1.146:18080/
      externalIPs:
        - 10.200.1.146
      ports:
        - name: web
          port: 18080
          targetPort: 8080
        - name: agent
          port: 50000
          targetPort: 50000

Apply the YAML files in order

    kubectl apply -f 01-nfs-rbac.yaml
    kubectl apply -f 02-nfs-class.yaml
    kubectl apply -f 03-nfs-deployment.yaml
    kubectl apply -f 04-jenkins-account.yaml
    kubectl apply -f 05-jenkins-pvc.yaml
    kubectl apply -f 06-jenkins-deployment.yaml
    kubectl apply -f 07-jenkins-services.yaml
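The manifests assume that the kube-ops namespace already exists, and a failure in an early file makes the later ones pointless. The sequence above could be wrapped roughly as follows. This is a sketch that assumes kubectl is configured for the target cluster; deploy_jenkins is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical wrapper: make sure the namespace exists, then apply the
# manifests in the order given, stopping at the first failure.
deploy_jenkins() {
  ns="$1"; shift
  kubectl get namespace "$ns" >/dev/null 2>&1 || kubectl create namespace "$ns" || return 1
  for f in "$@"; do
    echo "applying $f"
    kubectl apply -f "$f" || return 1
  done
  # List the resulting objects so a Pending PVC or crashing Pod is visible at once.
  kubectl -n "$ns" get pods,pvc,svc
}

# Usage (the glob expands in numeric order because of the 01..07 prefixes):
#   deploy_jenkins kube-ops 0[1-7]-*.yaml
```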

安装需要的插件(比如Git,Kubernetes,Pipeline等)

我这里因为是需要把老Jenkins迁移到K8S,所以是直接把相关目录还原到了NFS机器上的/data/k8s/kube-ops/jenkins,记得还原前需要先停止Jenkins Master Deployment。

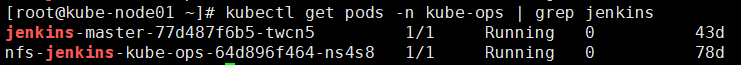

部分截图

Deploying the Jenkins Slave

Steps

- Build the Jenkins Slave image.
- Configure the Kubernetes plugin on the Jenkins Master.
- Modify the project's Jenkinsfile.

Getting started

Build the Jenkins Slave image

This is my Dockerfile. Adapt it as needed; it is for reference only.

The image is named 10.200.0.143:80/wenwo/devops/jenkins-slave:v4.

    FROM jenkins/inbound-agent:latest-jdk8
    LABEL maintainer="runfa.li"

    # Switch to root for the installation steps
    USER root

    COPY maven.tar.gz .
    COPY node_modules /usr/local/lib/node_modules
    COPY static_push_oss /usr/local/bin/static_push_oss
    COPY .npmrc /root/.npmrc
    COPY .cnpmrc /root/.cnpmrc
    COPY .ossutilconfig /root/.ossutilconfig
    COPY sources.list .

    # Refresh the package index first so the HTTPS transport packages can be
    # installed before switching to the custom sources.list
    RUN apt-get update && \
        apt-get install -y apt-transport-https ca-certificates && \
        mv -f sources.list /etc/apt/sources.list && \
        echo "Acquire::http::Pipeline-Depth \"0\";" > /etc/apt/apt.conf.d/99nopipelining && \
        apt-get update && \
        apt-get install -y curl gnupg zip unzip gconf-service libxext6 libxfixes3 libxi6 libxrandr2 \
          libxrender1 libcairo2 libcups2 libdbus-1-3 libexpat1 libfontconfig1 libgcc1 libgconf-2-4 libgdk-pixbuf2.0-0 libglib2.0-0 \
          libgtk-3-0 libnspr4 libpango-1.0-0 libpangocairo-1.0-0 libstdc++6 libx11-6 libx11-xcb1 libxcb1 libxcomposite1 libxcursor1 \
          libxdamage1 libxss1 libxtst6 libappindicator1 libnss3 libasound2 libatk1.0-0 libc6 fonts-liberation lsb-release xdg-utils wget && \
        install -m 0755 -d /etc/apt/keyrings && \
        curl -fsSL https://download.docker.com/linux/debian/gpg | gpg --dearmor -o /etc/apt/keyrings/docker.gpg && \
        echo \
          "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/debian \
          "$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null && \
        apt-get update && \
        curl -sL https://deb.nodesource.com/setup_14.x | bash - && \
        apt-get install -y nodejs docker-ce && \
        tar -xvf maven.tar.gz && \
        mkdir -p /data && \
        mv maven /data && \
        rm -f maven.tar.gz && \
        npm install cnpm -g && \
        node -v && \
        npm -v && \
        cnpm -v && \
        chmod 755 -R /data/maven/bin/ && \
        chmod 755 -R /usr/local/lib/node_modules/n/bin && \
        chmod 755 -R /usr/local/lib/node_modules/npm/bin && \
        chmod 755 -R /usr/local/lib/node_modules/nrm/cli.js && \
        chmod 755 -R /usr/local/lib/node_modules/pnpm/bin && \
        chmod 755 /usr/local/bin/static_push_oss && \
        ln -sf /data/maven/bin/mvn /usr/bin/mvn && \
        ln -sf /usr/local/lib/node_modules/n/bin/n /usr/local/bin/n && \
        ln -sf /usr/local/lib/node_modules/npm/bin/npx-cli.js /usr/local/bin/npx && \
        ln -sf /usr/local/lib/node_modules/nrm/cli.js /usr/local/bin/nrm && \
        ln -sf /usr/local/lib/node_modules/pnpm/bin/pnpm.cjs /usr/local/bin/pnpm && \
        ln -sf /usr/local/lib/node_modules/pnpm/bin/pnpx.cjs /usr/local/bin/pnpx && \
        mkdir -p /data/repo && \
        apt-get clean && \
        rm -rf /var/lib/apt/lists/*

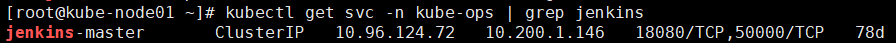

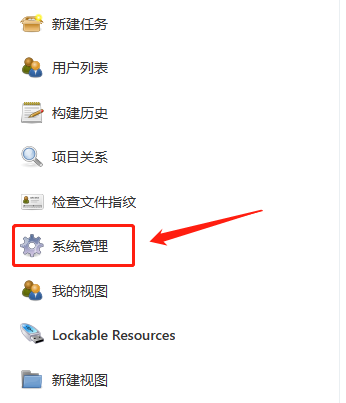

配置Jenkins Master的Kubernetes插件

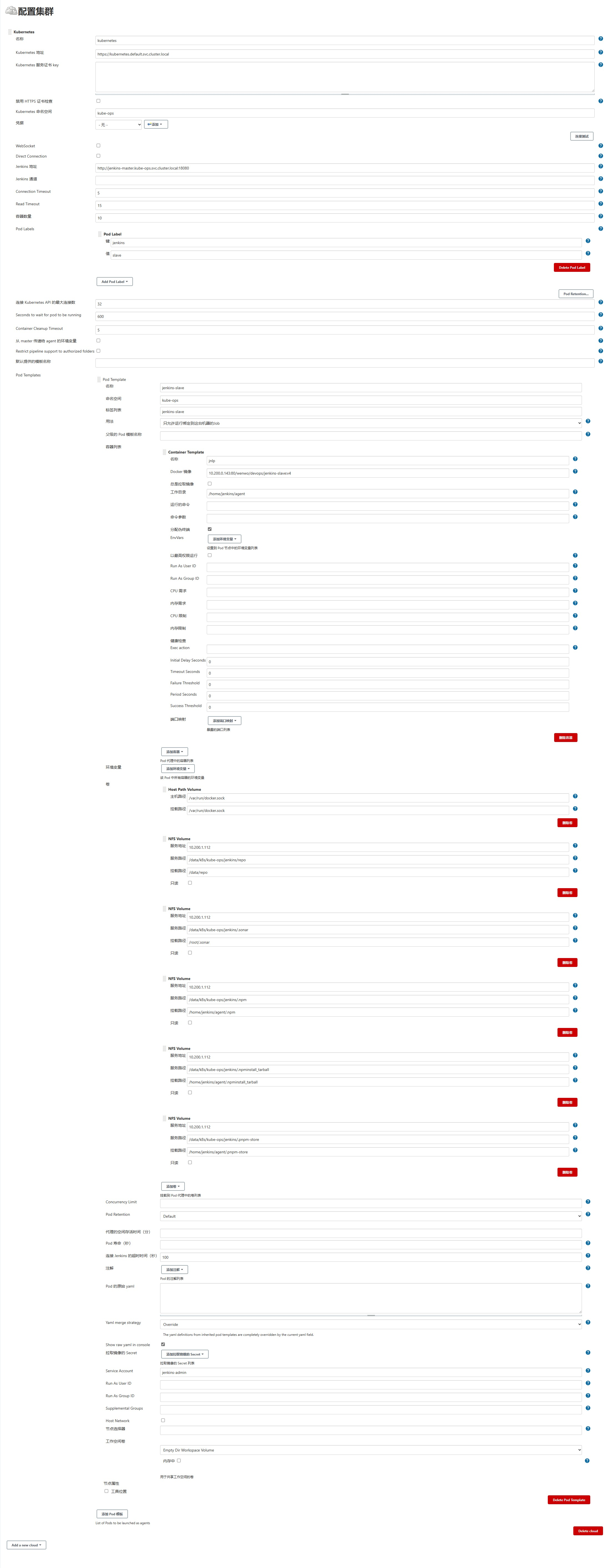

此处通过截图介绍,配置之前,请先自行安装好Kubernetes插件。

简单介绍下长图的内容(依次往下)

kubernetes 块

kubernetes地址:https://kubernetes.default.svc.cluster.localnamespace填kube-ops,然后点击 连接测试,如果出现Connection test successful的提示信息证明Jenkins Master已经可以和Kubernetes集群正常通信Jenkins Master URL地址:http://jenkins-master.kube-ops.svc.cluster.local:18080(注意关键的svc名字,namespace名字,svc对外的端口)- 其他默认,

Pod Labels可以不填
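The Jenkins URL above follows the in-cluster DNS pattern of Service name, namespace, svc, cluster domain, then the Service port. The small sketch below assembles it from those parts. svc_url is a hypothetical helper, and cluster.local is the default cluster domain, which is an assumption if your cluster overrides it.

```shell
#!/bin/sh
# Hypothetical helper: build the in-cluster HTTP URL of a Service.
svc_url() {
  svc="$1"; ns="$2"; port="$3"; domain="${4:-cluster.local}"
  echo "http://${svc}.${ns}.svc.${domain}:${port}"
}

# Service name, namespace, and Service port (not targetPort) from 07-jenkins-services.yaml
svc_url jenkins-master kube-ops 18080
```

The port is the Service port 18080, not the container port 8080, because agents connect through the Service.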

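Pod Templates block

- The first fields are name, namespace, and labels. They can be set freely, but write them down.
- In Container Template, name the container jnlp. I suspected an upstream bug because other names caused errors; the Kubernetes plugin in fact reserves the name jnlp for the agent container, and a container with a different name runs next to a default jnlp container instead of replacing it.
- Docker image is the Jenkins Slave image just built. Leave the working directory at its default.
- Next come the volumes. The first one, /var/run/docker.sock, is required; note that it is a Host Path Volume. Mount the others as needed.
- Service Account must be the cluster administrator account created earlier, jenkins-admin.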

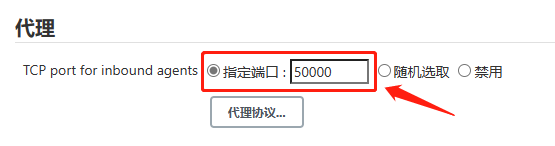

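You also need to set a fixed agent port.

The port number is the agent port configured in the Jenkins Master Service, which maps to the agent port declared in the Jenkins Master Deployment (50000 in both manifests).

Set it under [Manage Jenkins] > [Configure Global Security].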

Modify the project's Jenkinsfile

Without further ado, here it is:

    def registry = "10.200.0.143:80"
    def docker_registry_auth = "26fa913d-03b1-4b1f-a65c-8d79ad21ccd1"

    pipeline {
        agent {
            kubernetes {
                label "jenkins-slave"
                cloud 'kubernetes'
                yaml '''
    apiVersion: v1
    kind: Pod
    metadata:
      name: jenkins-slave
    spec:
      containers:
        - name: jnlp
          image: 10.200.0.143:80/wenwo/devops/jenkins-slave:v4
    '''
            }
        }
        environment {
            def config = readJSON file: 'package.json'
            VERSION = "${config.version}"
            PORT=56005
            NAME="${env.JOB_NAME}"
            Build_Id="${env.BUILD_ID}"
            TimeStr = new Date().format('yyyyMMddHHmm')
        }
        stages {
            stage("Build and package") {
                steps {
                    script {
                        def ProjectBranch = env.GIT_BRANCH.split("/")[1]
                        def START_SCRIPT= ""
                        if (ProjectBranch == "master") {
                            // master: build and image the UAT variant first, then run the release build
                            def START_SCRIPT_UAT= "release:uat"
                            sh "npm install && npm run build:uat"
                            sh 'tar czf Data-uat-${VERSION}.tgz .nuxt config static node_modules nuxt.config.js package.json'
                            sh 'tar czf Static-Data-${VERSION}.tgz .nuxt'
                            sh "echo '' > Dockerfile"
                            sh "echo 'FROM registry.cn-beijing.aliyuncs.com/xxx/nodejs:v14.18.0' >> Dockerfile"
                            sh "echo 'COPY Data-uat-${VERSION}.tgz Data-uat-${VERSION}.tgz' >> Dockerfile"
                            sh "echo 'RUN [\"mkdir\",\"-p\",\"/www/\"]' >> Dockerfile"
                            sh "echo 'RUN [\"tar\",\"-xf\",\"Data-uat-${VERSION}.tgz\",\"-C\",\"/www/\"]' >> Dockerfile"
                            sh "echo 'RUN [\"rm\",\"-f\",\"Data-uat-${VERSION}.tgz\"]' >> Dockerfile"
                            sh "echo 'WORKDIR \"/www/\"' >> Dockerfile"
                            sh "echo 'ENTRYPOINT [\"npm\",\"run\",\"${START_SCRIPT_UAT}\"]' >> Dockerfile"
                            sh "echo 'EXPOSE ${PORT}' >> Dockerfile"
                            sh 'cat Dockerfile'
                            sh "docker build -t h5/${NAME}:${VERSION}-aut-${TimeStr} ."
                            sh "ls -alh "
                            sh "ls -alh .nuxt/dist/client/css"
                            sh "rm -rf Data-uat-${VERSION}.tgz node_modules/.cache"
                            sh "ls -alh "
                            START_SCRIPT = "release"
                            sh "npm run build"
                            sh "ls -alh .nuxt/dist/client/css"
                        } else if (ProjectBranch == "rebuild-test") {
                            START_SCRIPT = "release:rebuild-test"
                            sh "npm install && npm run build:rebuild-test"
                        } else if (ProjectBranch == "test") {
                            START_SCRIPT = "release:test"
                            sh "npm install && npm run build:test"
                        } else if (ProjectBranch == "rebuild-dev") {
                            START_SCRIPT = "release:rebuild-dev"
                            sh "npm install && npm run build:rebuild-dev"
                        } else if (ProjectBranch == "uat") {
                        } else {
                            START_SCRIPT = "release:dev"
                            sh "npm install && npm run build:dev"
                        }
                        // Package the build output and generate the Dockerfile for the branch image
                        echo VERSION
                        sh 'tar czf Data-${VERSION}.tgz .nuxt config static node_modules nuxt.config.js package.json'
                        sh 'tar czf Static-Data-${VERSION}.tgz .nuxt'
                        def projectBranch = env.GIT_BRANCH.split("/")[1]
                        sh "echo '' > Dockerfile"
                        sh "echo 'FROM registry.cn-beijing.aliyuncs.com/xxx/nodejs:v14.18.0' >> Dockerfile"
                        sh "echo 'COPY Data-${VERSION}.tgz Data-${VERSION}.tgz' >> Dockerfile"
                        sh "echo 'RUN [\"mkdir\",\"-p\",\"/www/\"]' >> Dockerfile"
                        sh "echo 'RUN [\"tar\",\"-xf\",\"Data-${VERSION}.tgz\",\"-C\",\"/www/\"]' >> Dockerfile"
                        sh "echo 'RUN [\"rm\",\"-f\",\"Data-${VERSION}.tgz\"]' >> Dockerfile"
                        sh "echo 'WORKDIR \"/www/\"' >> Dockerfile"
                        sh "echo 'ENTRYPOINT [\"npm\",\"run\",\"${START_SCRIPT}\"]' >> Dockerfile"
                        sh "echo 'EXPOSE ${PORT}' >> Dockerfile"
                        sh 'cat Dockerfile'
                        sh "docker build -t h5/${NAME}:${VERSION}-${projectBranch} ."
                    }
                }
            }
            stage("Push image") {
                steps {
                    script {
                        def projectBranch = env.GIT_BRANCH.split("/")[1]
                        def imageName="${registry}/wenwo/${NAME}:${VERSION}-${projectBranch}-${env.BUILD_ID}-${TimeStr}"
                        def imageNameUat=""
                        if (projectBranch == "master") {
                            // master also pushes the UAT image built in the previous stage
                            withCredentials([usernamePassword(credentialsId: "${docker_registry_auth}", passwordVariable: 'password', usernameVariable: 'username')]) {
                                imageNameUat="${registry}/wenwo/${NAME}:${VERSION}-uat-${env.BUILD_ID}-${TimeStr}"
                                sh "docker login ${registry} -u ${username} -p ${password}"
                                sh "docker tag h5/${NAME}:${VERSION}-aut-${TimeStr} ${imageNameUat}"
                                sh "docker push ${imageNameUat}"
                                sh "docker rmi h5/${NAME}:${VERSION}-aut-${TimeStr} ${imageNameUat}"
                            }
                        }
                        withCredentials([usernamePassword(credentialsId: "${docker_registry_auth}", passwordVariable: 'password', usernameVariable: 'username')]) {
                            sh "docker login ${registry} -u ${username} -p ${password}"
                            sh "docker tag h5/${NAME}:${VERSION}-${projectBranch} ${imageName}"
                            sh "docker push ${imageName}"
                            sh "docker rmi h5/${NAME}:${VERSION}-${projectBranch} ${imageName}"
                        }
                    }
                }
            }
            stage("Clean up workspace") {
                steps {
                    sh "ls -al"
                    deleteDir()
                    sh "ls -al"
                }
            }
        }
        post {
            failure {
                echo "failure"
                deleteDir()
            }
        }
    }
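One detail of the pipeline above is worth a closer look: the branch is derived with env.GIT_BRANCH.split("/")[1], which turns origin/feature/login into just feature and fails when the value has no slash at all. A shell sketch of a more forgiving derivation follows. branch_tag is a hypothetical helper, and replacing slashes with dashes is my assumption, made because Docker image tags cannot contain a slash.

```shell
#!/bin/sh
# Hypothetical helper: turn a GIT_BRANCH value into a string usable in an image tag.
branch_tag() {
  branch="${1#*/}"                      # drop the remote prefix such as "origin/", if present
  printf '%s\n' "$branch" | tr '/' '-'  # slashes are not allowed in Docker tags
}

branch_tag origin/master
branch_tag origin/feature/login
```

The two calls print master and feature-login. In the Jenkinsfile the same logic could run in an sh step or be rewritten in Groovy; the pipeline shown here keeps the author's original split.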

This is the original version; compare the two to see how to adapt your own.

    pipeline {
        agent any
        environment {
            def config = readJSON file: 'package.json'
            VERSION = "${config.version}"
            PORT=56005
            NAME="${env.JOB_NAME}"
            Build_Id="${env.BUILD_ID}"
            TimeStr = new Date().format('yyyyMMddHHmm')
        }
        stages {
            stage("Build and package") {
                steps {
                    script {
                        def ProjectBranch = env.GIT_BRANCH.split("/")[1]
                        def START_SCRIPT= ""
                        if (ProjectBranch == "master") {
                            def START_SCRIPT_UAT= "release:uat"
                            sh "npm install && npm run build:uat"
                            sh 'tar czf Data-uat-${VERSION}.tgz .nuxt config static node_modules nuxt.config.js package.json'
                            sh 'tar czf Static-Data-${VERSION}.tgz .nuxt'
                            sh "echo '' > Dockerfile"
                            sh "echo 'FROM registry.cn-beijing.aliyuncs.com/xxx/nodejs:v14.18.0' >> Dockerfile"
                            sh "echo 'COPY Data-uat-${VERSION}.tgz Data-uat-${VERSION}.tgz' >> Dockerfile"
                            sh "echo 'RUN [\"mkdir\",\"-p\",\"/www/\"]' >> Dockerfile"
                            sh "echo 'RUN [\"tar\",\"-xf\",\"Data-uat-${VERSION}.tgz\",\"-C\",\"/www/\"]' >> Dockerfile"
                            sh "echo 'RUN [\"rm\",\"-f\",\"Data-uat-${VERSION}.tgz\"]' >> Dockerfile"
                            sh "echo 'WORKDIR \"/www/\"' >> Dockerfile"
                            sh "echo 'ENTRYPOINT [\"npm\",\"run\",\"${START_SCRIPT_UAT}\"]' >> Dockerfile"
                            sh "echo 'EXPOSE ${PORT}' >> Dockerfile"
                            sh 'cat Dockerfile'
                            sh "docker build -t h5/${NAME}:${VERSION}-aut-${TimeStr} ."
                            sh "ls -alh "
                            sh "ls -alh .nuxt/dist/client/css"
                            sh "rm -rf Data-uat-${VERSION}.tgz node_modules/.cache"
                            sh "ls -alh "
                            START_SCRIPT = "release"
                            sh "npm run build"
                            sh "ls -alh .nuxt/dist/client/css"
                        } else if (ProjectBranch == "rebuild-test") {
                            START_SCRIPT = "release:rebuild-test"
                            sh "npm install && npm run build:rebuild-test"
                        } else if (ProjectBranch == "test") {
                            START_SCRIPT = "release:test"
                            sh "npm install && npm run build:test"
                        } else if (ProjectBranch == "rebuild-dev") {
                            START_SCRIPT = "release:rebuild-dev"
                            sh "npm install && npm run build:rebuild-dev"
                        } else if (ProjectBranch == "uat") {
                        } else {
                            START_SCRIPT = "release:dev"
                            sh "npm install && npm run build:dev"
                        }
                        echo VERSION
                        sh 'tar czf Data-${VERSION}.tgz .nuxt config static node_modules nuxt.config.js package.json'
                        sh 'tar czf Static-Data-${VERSION}.tgz .nuxt'
                        def projectBranch = env.GIT_BRANCH.split("/")[1]
                        sh "echo '' > Dockerfile"
                        sh "echo 'FROM registry.cn-beijing.aliyuncs.com/xxx/nodejs:v14.18.0' >> Dockerfile"
                        sh "echo 'COPY Data-${VERSION}.tgz Data-${VERSION}.tgz' >> Dockerfile"
                        sh "echo 'RUN [\"mkdir\",\"-p\",\"/www/\"]' >> Dockerfile"
                        sh "echo 'RUN [\"tar\",\"-xf\",\"Data-${VERSION}.tgz\",\"-C\",\"/www/\"]' >> Dockerfile"
                        sh "echo 'RUN [\"rm\",\"-f\",\"Data-${VERSION}.tgz\"]' >> Dockerfile"
                        sh "echo 'WORKDIR \"/www/\"' >> Dockerfile"
                        sh "echo 'ENTRYPOINT [\"npm\",\"run\",\"${START_SCRIPT}\"]' >> Dockerfile"
                        sh "echo 'EXPOSE ${PORT}' >> Dockerfile"
                        sh 'cat Dockerfile'
                        sh "docker build -t h5/${NAME}:${VERSION} ."
                    }
                }
            }
            stage("Push image") {
                steps {
                    script {
                        def projectBranch = env.GIT_BRANCH.split("/")[1]
                        def imageName="10.200.0.143:80/wenwo/${NAME}:${VERSION}-${projectBranch}-${env.BUILD_ID}-${TimeStr}"
                        def imageNameUat=""
                        if (projectBranch == "master") {
                            imageNameUat="10.200.0.143:80/wenwo/${NAME}:${VERSION}-uat-${env.BUILD_ID}-${TimeStr}"
                            sh "docker tag h5/${NAME}:${VERSION}-aut-${TimeStr} ${imageNameUat}"
                            sh "docker push ${imageNameUat}"
                            sh "docker rmi h5/${NAME}:${VERSION}-aut-${TimeStr} ${imageNameUat}"
                        }
                        sh "docker tag h5/${NAME}:${VERSION} ${imageName}"
                        sh "docker push ${imageName}"
                        sh "docker rmi h5/${NAME}:${VERSION} ${imageName}"
                    }
                }
            }
            stage("Clean up workspace") {
                steps {
                    sh "ls -al"
                    deleteDir()
                    sh "ls -al"
                }
            }
        }
        post {
            failure {
                echo "failure"
                deleteDir()
            }
        }
    }

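Comparing the two, the main addition is the block below. The push stage additionally wraps its docker commands in withCredentials so that it can log in to the registry.

    // The next two lines set the Harbor address and the ID of the Harbor
    // username/password credential added on the Jenkins Master
    def registry = "10.200.0.143:80"
    def docker_registry_auth = "26fa913d-03b1-4b1f-a65c-8d79ad21ccd1"

    pipeline {
        // The agent block must set label to the label entered in Pod Templates
        // cloud must be the name entered in the Kubernetes block
        // Then comes a short YAML template that mostly mirrors the Pod Templates settings
        agent {
            kubernetes {
                label "jenkins-slave"
                cloud 'kubernetes'
                yaml '''
    apiVersion: v1
    kind: Pod
    metadata:
      name: jenkins-slave
    spec:
      containers:
        - name: jnlp
          image: 10.200.0.143:80/wenwo/devops/jenkins-slave:v4
    '''
            }
        }
        // ... the environment, stages, and post blocks follow as in the full Jenkinsfile
    }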

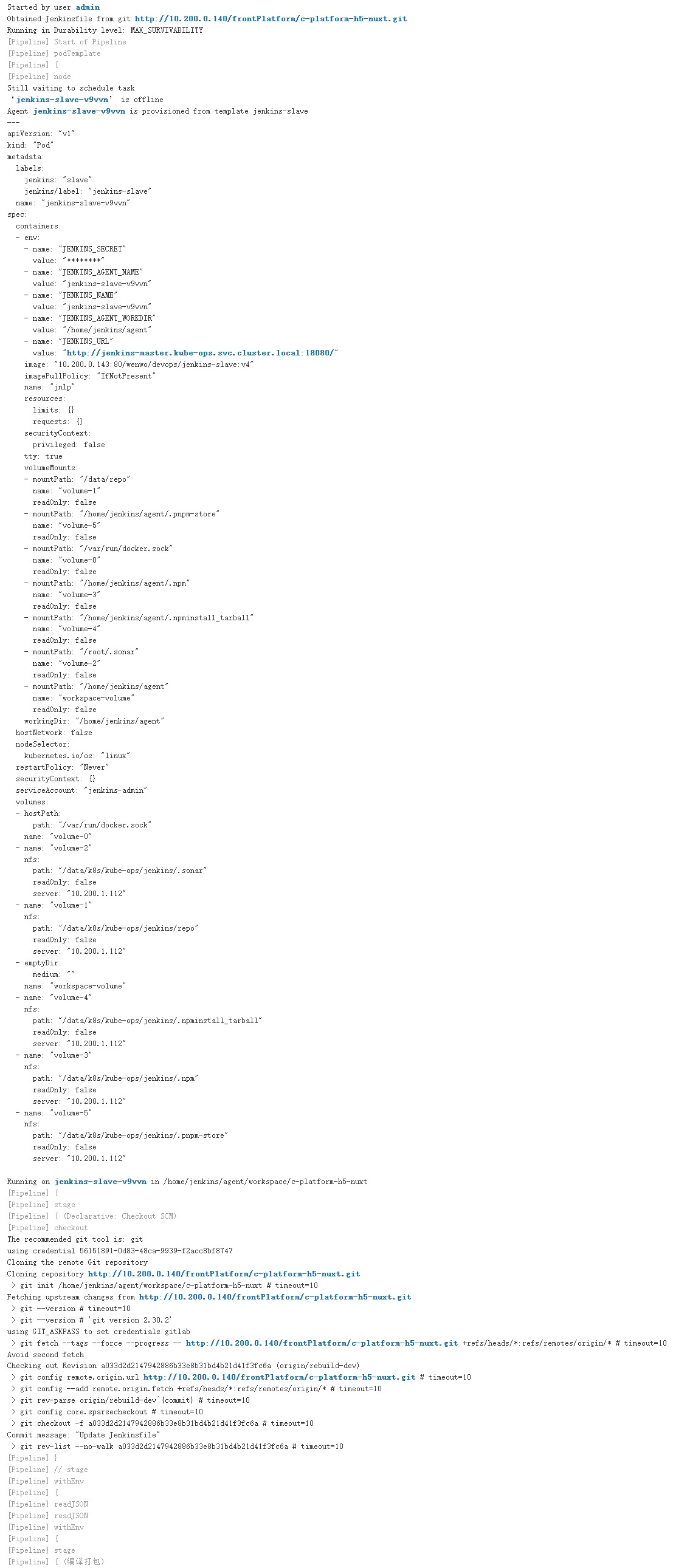

测试构建

找个项目构建

我这里用的是Pipeline script from SCM的方式。

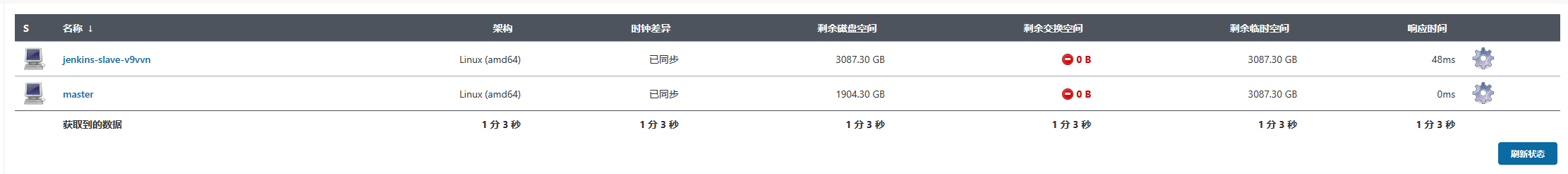

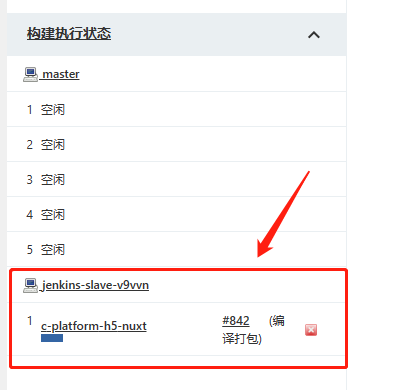

点了构建后,先看截图。

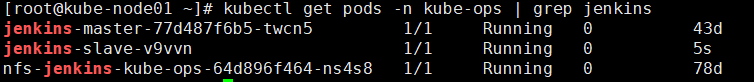

构建进行时

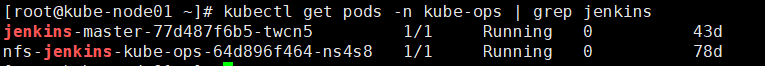

构建完成后

解释

由截图可以看出,此时当项目点击构建后,会根据Kubernetes插件的配置创建一个Jenkins Slave的POD,构建完成之后,会把这个动态创建的POD删除,这就完成了Jenkins动态构建了。

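Afterword

If you run into problems during deployment, feel free to leave a comment. I will add some debugging tips when I have time.

I hope this article helps. Thanks for reading!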
